Research Highlights

Over the past decade, the National Science Foundation has supported UC San Diego’s Science of Learning Center, known as the Temporal Dynamics of Learning Center or “TDLC”. TDLC's focus was to achieve an integrated understanding of the role of time and timing in learning, across multiple scales, brain systems, and social systems. Its scientific goal was therefore to understand the temporal dynamics of learning and to apply this understanding to improve educational practice. The following links highlight many of the research projects that were part of TDLC. They are representative of the center's vast portfolio of remarkable discoveries and of its accomplished, ingenious, and effective community of scientists.

2016-2017 Highlights

Video Game Training to Improve Eye Gaze Behavior in Children with Autism

SIMPHONY Study - Studying the Influence Music Practice Has On Neurodevelopment in Youth (SIMPHONY)

|

|

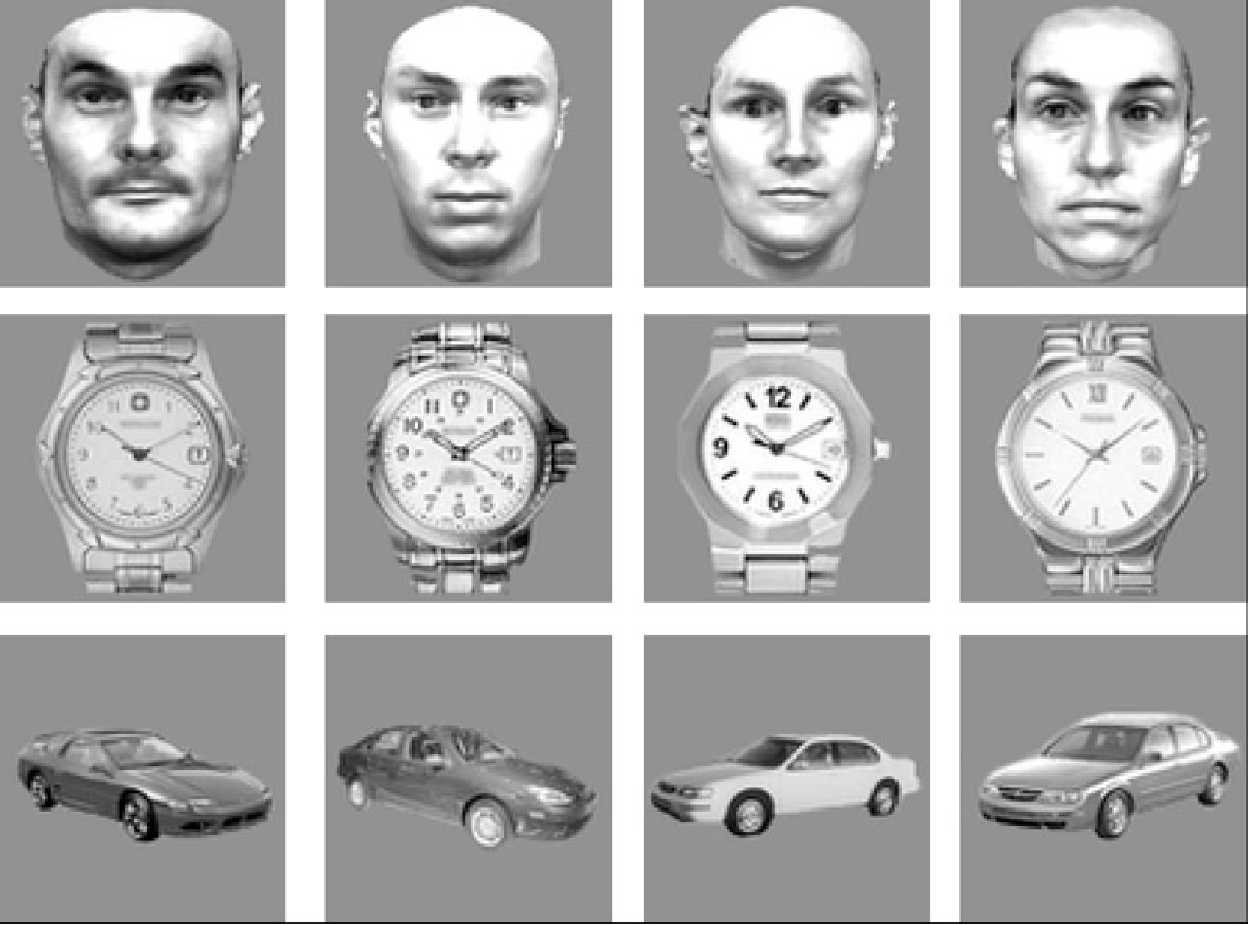

Domain-specific and domain-general individual differences in visual object recognition Year: 2016-2017; Principal Investigator: Isabel Gauthier |

Using game-based technology to enhance real-world interpretation of experimental results

Science of Learning Research Center researchers teach science through making music and receive continued support from the National Science Foundation

Year: 2016-2017; Principal Investigators: Victor Minces and Alex Khalil

|

|

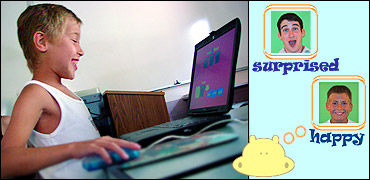

Face Camp: A chance for children to explore the science of face recognition Year: 2016-2017; Principal Investigator: Jim Tanaka |

|

|

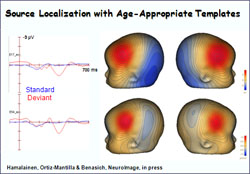

Brain waves during sleep in human infants are differentially associated with measures of language development in boys and girls Year: 2016-2017; Principal Investigator: Sue Peters and April Benasich |

Neuromodulator Acetylcholine increases the capacity of the brain to perceive the world

Making Games with Movement

Year: 2016-2017


Understanding how the brain represents and processes complex, time-varying streams of sensory information |

2015-2016 Highlights

Training Facial Expressions in Autism


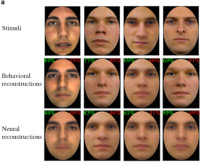

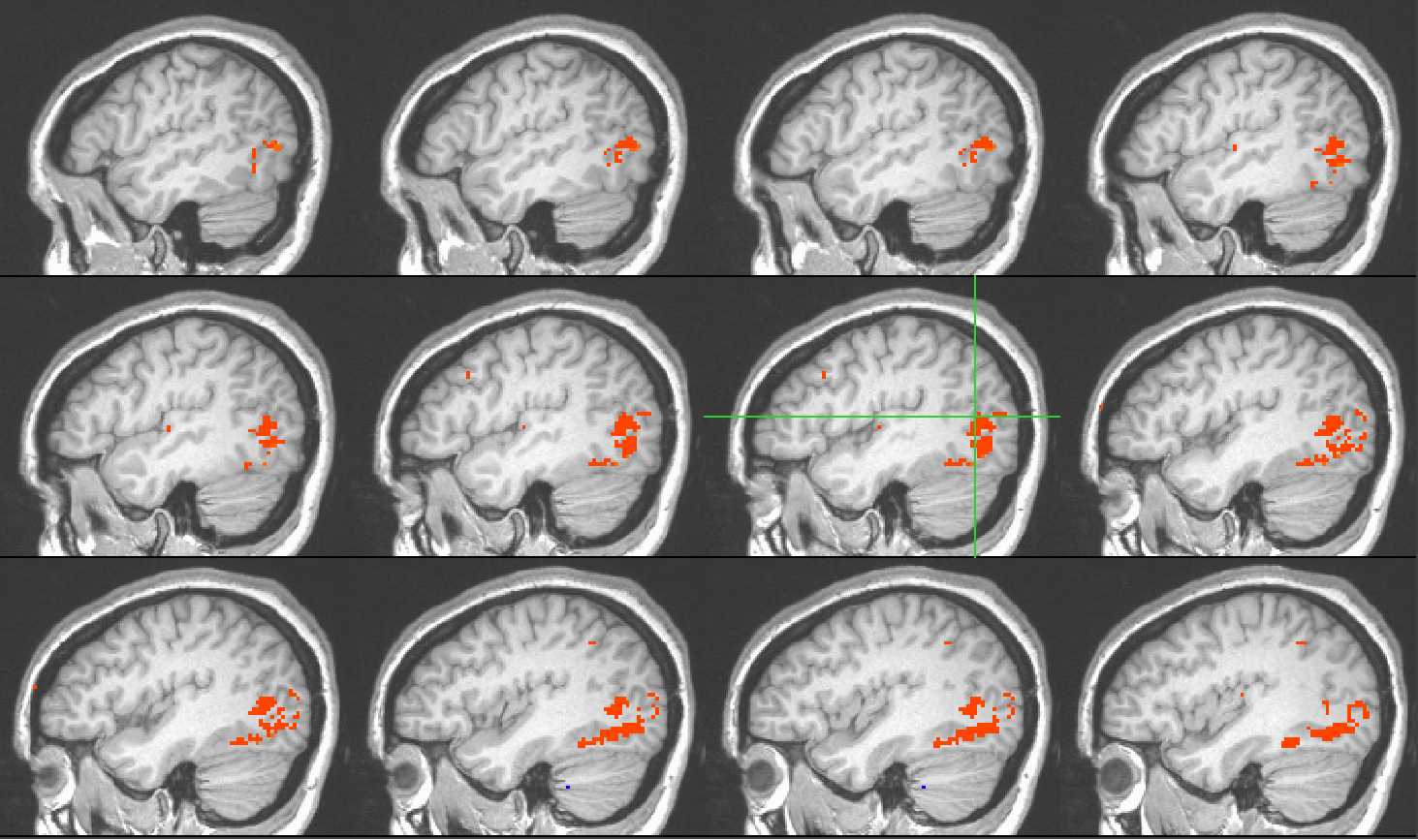

Understanding the neural code that supports the individuation of similar faces


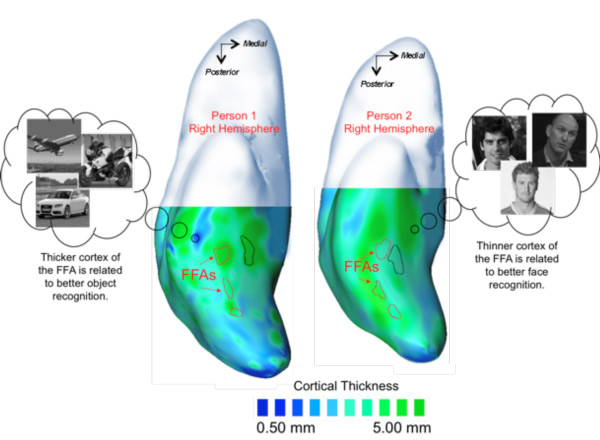

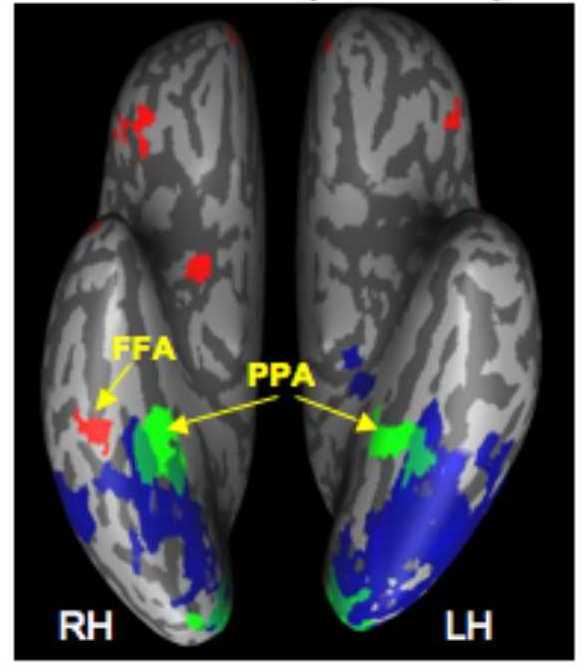

Thickness of Cortical Grey Matter Predicts Face and Object Recognition

The Science of Learning Research Center Researchers Advocate for Science of Learning in Washington DC (2015-16)


Plasticity in Developing Brain:


2014-2015 Highlights


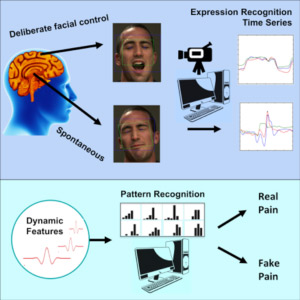

Using Automated Facial Expression Recognition Technology to Distinguish Between Cortical and Subcortical Facial Motor Control

The Science of Learning Research Center's First MOOC Yields a Staggering Number of Students on Coursera!


Cortical Thickness in Fusiform Face Area Predicts Face and Object Recognition Performance

The Science of Learning Research Center Researchers Advocate for Science of Learning in Washington DC (2014)


A New Method Applied to Kinematic Data Reveals Hidden Influences on Reach and Grasp Trajectories

2013-2014 Highlights


Personalized Review Improves Students’ Long-Term Knowledge Retention


Face Perception


Neural Systems for the Visual Processing of Words and Faces


Predicting Memory from EEG


Temporal Coding in the Dentate Gyrus


Attention in Children is Related to Interpersonal Timing


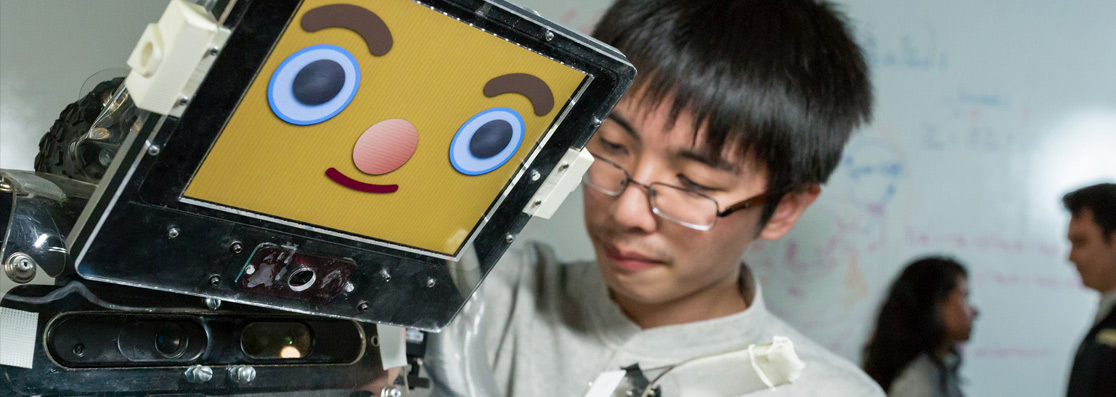

Human-Robot Interaction (HRI) as a Tool to Monitor Socio-Emotional Development in Early Childhood Education

Micro-valences: perceiving affective valence in everyday objects

New research from Carnegie Mellon University's Center for the Neural Basis of Cognition (CNBC) shows that the brain's visual perception system automatically and unconsciously guides decision-making through something called valence perception. Valence, defined as “the positive or negative information automatically perceived in the majority of visual information”, is a process that allows our brains to quickly make choices between similar objects. The researchers conclude that “everyday objects carry subtle affective valences – ‘micro-valences’ – which are intrinsic to their perceptual representation.”

Learning to read may trigger right-left hemisphere difference for face recognition

In this study, adults showed the expected left and right visual field superiority for face and word discrimination, respectively, whereas the young adolescents demonstrated only the right field superiority for words and no field superiority for faces. Although the children's overall accuracy was lower than that of the older groups, they, like the young adolescents, exhibited a right visual field superiority for words but no field superiority for faces. Interestingly, the emergence of face lateralization was correlated with reading competence, measured on an independent standardized test, after regressing out age, quantitative reasoning scores, and face discrimination accuracy.

Computer-Based Cognitive and Literacy Skills Training Improves Students' Writing Skills

A study conducted at Rutgers University finds that cognitive and literacy skills training improves college students' basic writing skills.

2013 and earlier

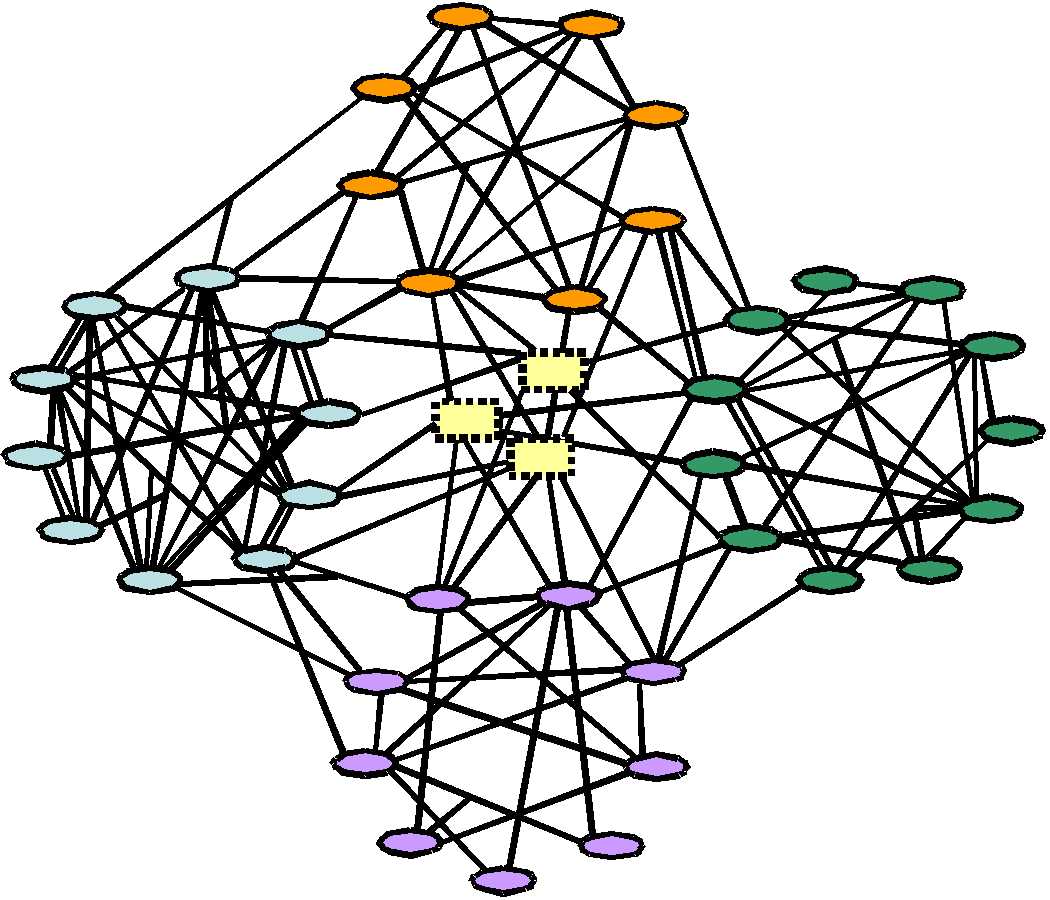

Toward optimal learning dynamics

As outlined in a recent Science article coauthored by members of the Science of Learning Research Center and LIFE centers, transformative advances in the science of learning require collaboration from multiple disciplines, including psychology, neuroscience, machine learning, and education. The Science of Learning Research Center has implemented this approach through the formation of research networks: small interdisciplinary teams focused on a common research agenda.

A review of STDP by the Science of Learning Research Center Investigator Feldman is featured in Neuron

"Sex matters: Guys recognize cars and women recognize birds best"


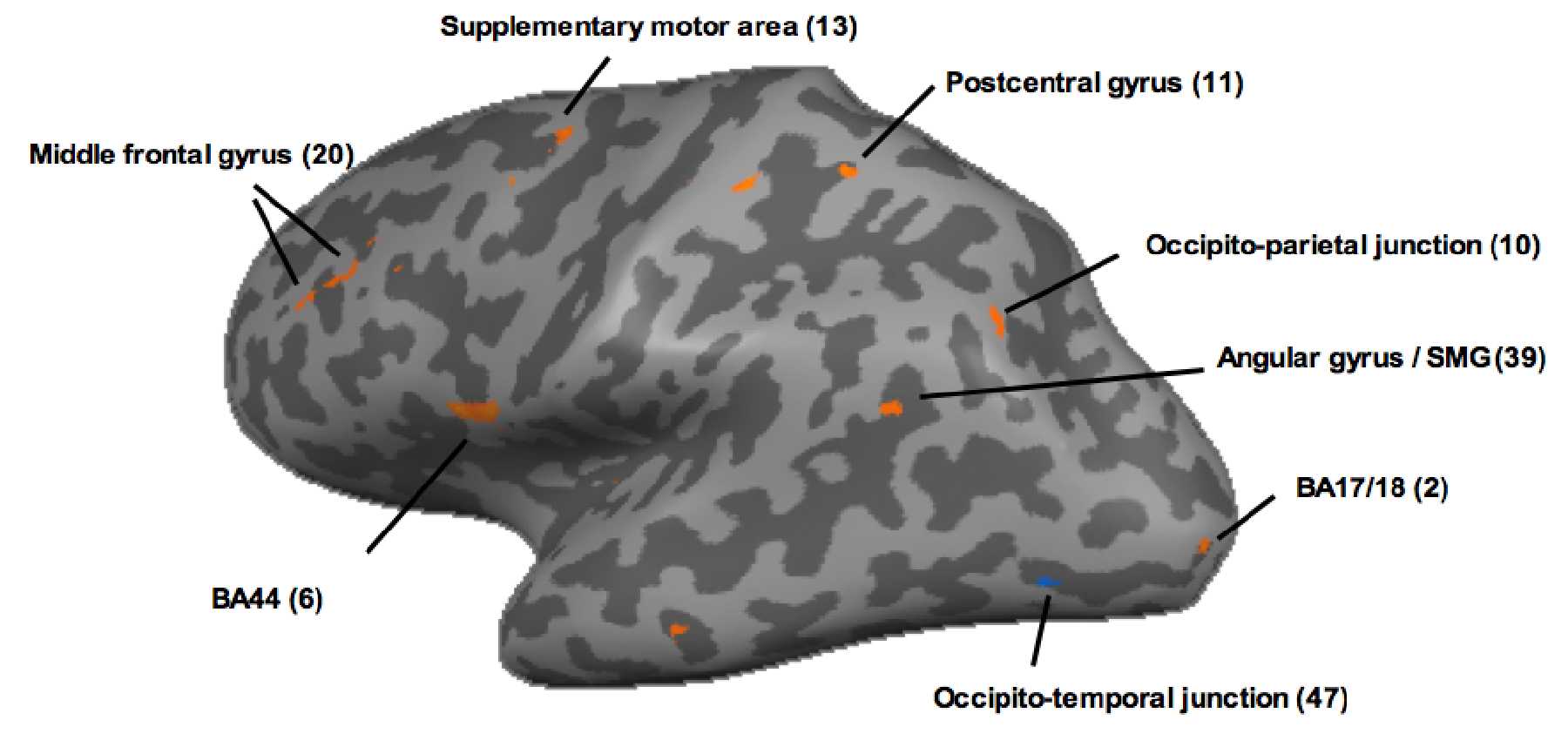

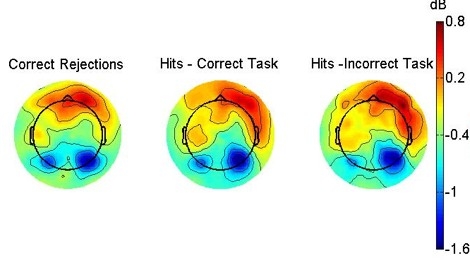

Different kinds of visual learning reflect different patterns of change in the brain


Let's Face It! and CERT help autistic children (2012)


Early Interventions: Baby Brains May Signal Later Language Problem (2011)


The Gamelan Project: The ability of a child to synchronize correlates with attentional performance (2011)

Computer-Based Cognitive and Literacy Skills Training Improves Students' Writing Skills (2011)

The Science of Learning Research Center, Music and the Brain (2011)

Partnership between UC San Diego, The Neurosciences Institute, and the San Diego Youth Symphony - Fall 2011.


SCCN and Music/Brain Research - 2011 Quartet for Brain and Trio

The Science of Learning Research Center investigator Scott Makeig, Director of the Swartz Center for Computational Neuroscience (SCCN), is interested in integrating music into his research. He uses the Brain Computer Interface to read emotions and convert those emotions into musical tones.

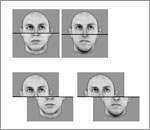

Patients with congenital face blindness outperform controls on face perception test

Individuals born with face-blindness (congenital prosopagnosia), while impaired at recognizing familiar faces and even at making perceptual judgments about whether two unknown faces are the same or different, are better than matched controls at detecting similarities/differences between parts of two faces in a composite face comparison task.

Holistic Processing Predicts Face Recognition

The concept of holistic processing (HP) is a cornerstone of face recognition research. We demonstrate that HP predicts face recognition abilities on the Cambridge Face Memory Test and a perceptual face identification task. Our findings validate a large body of work on face recognition that relies on the assumption that HP is related to face recognition.

Inverted Faces are (Eventually) Processed Holistically (in press)

Face inversion effects are used as evidence that faces are processed differently from objects. Nevertheless, there is debate about whether processing differences between upright and inverted faces are qualitative or quantitative.

Dr. April Benasich: Four new papers in press (November/December 2010)

The Power of Study and Testing Spacing (November 2010)

Neurons cast votes to guide decision-making (October 2010)

Music helps explain a paradox in research on faces and Chinese characters (2010)

|

|

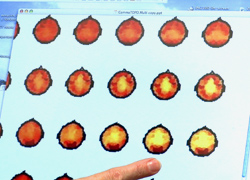

Recognizing Images Using Fixations (2010) |

The Gamelan Project - Exploring music and temporal perception in children (June 2010)

Enhancing facial expression recognition and production in children with autism (May 2010)

A computer vision system automatically recognizes facial expressions of students during problem solving (May 2010)

Our Rich Cognitive Abilities (February 2010)

Ah to be an Expert (February 2010)

Visual Pathways Fine Tuned over Time (February 2010)

Entrainment of Hippocampal Neurons by Theta Rhythm

The Wiring is Not Right: Congenital Prosopagnosia

Adolescents with Autism process faces as wholes but are not sensitive to configuration

Size of Infant's Amygdala Predicts Language Ability

Can You Recognize a Face with a Single Glance?

|

|

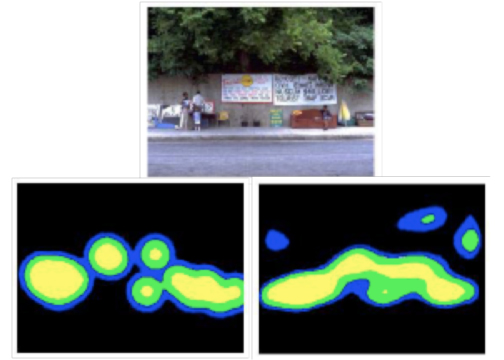

Task-driven salience: Directing Gaze for Visual Search | |

Your Lips, Your Eyes, Your Face!

The representation of hand actions in auditory sentence comprehension

Effect Of Gamma Waves On Cognitive And Language Skills In Children


What do we know about the color of men and women?


Machine Perception Lab PhD Student Turns Face Into Remote Control

O'Reilly Workshop on Models of High-Level Vision


Faces Studied as Parts are Processed as Wholes

It's All Chinese to Me


Faces Equally Special in Different Spatial Formats

NSF Face Camp

||

|

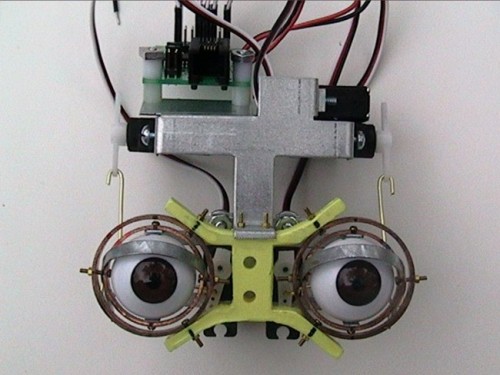

NIMBLE Eyes | |


We Have A Memory Advantage for Faces

The Musical Brain Sees Faster


The Neural Basis of Audio/Visual Event Perception


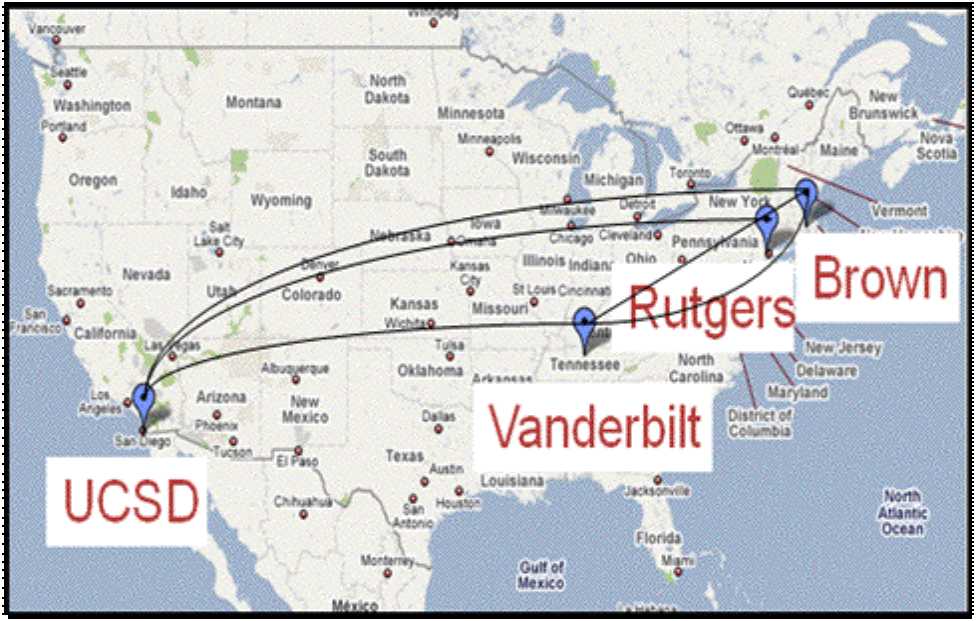

Cross-Country Data Grid


Brains R Us

Temporal Dynamics of Learning Center Promotes Discussions on Collaboration

Maturation of Psychological and Neural Mechanisms Supporting Face Recognition


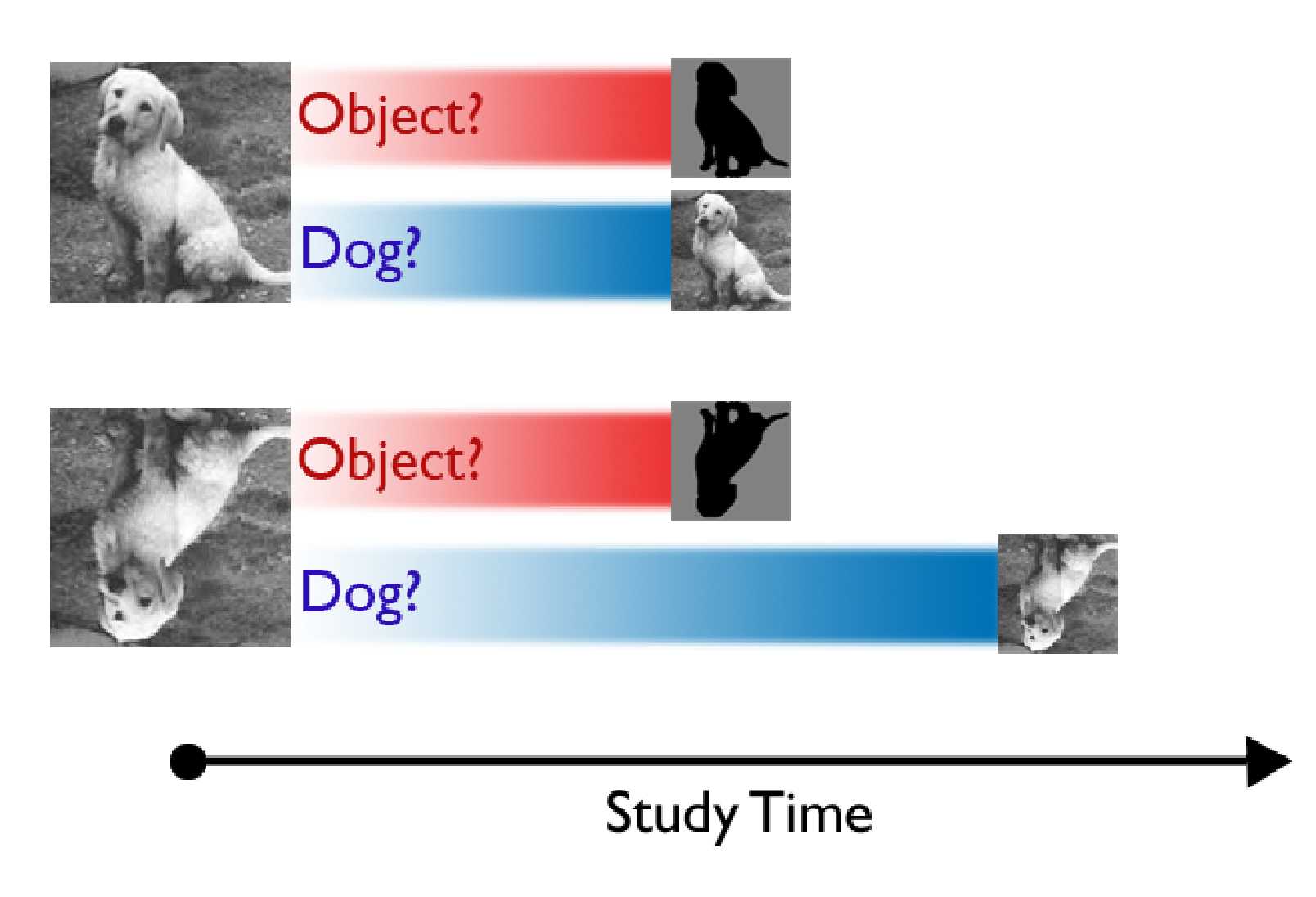

Knowing An Object Is There Does Not Necessarily Mean You Know What It Is


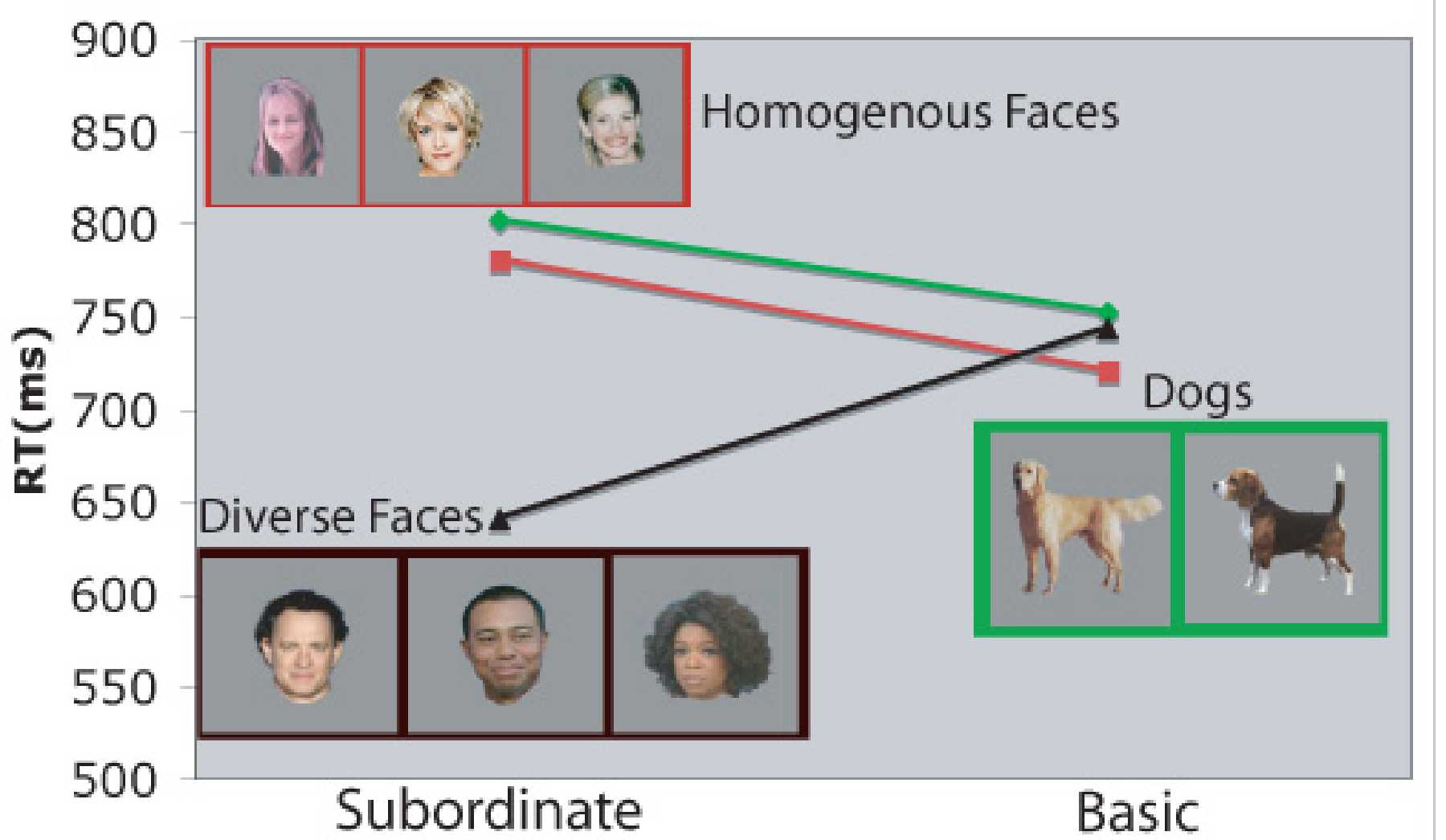

Similar Faces Show Object-like Categorization


Distribution and Support of Face Recognition Training Software

Social Interaction and the Dynamics of Learning

Learning to Become an Expert